Introduction

Do you think the web is the same as in 2010 or 2015? I bet everyone will say NO. The advancement of technology and the evolution of the internet have changed the nature of the web. Unlike before, we are much more dependent on the web for our daily lives because so much data is available to make our lives easier.

As the web expanded, the volume of data grew, and so did the need to study it.

Since then, it has become easy to extract valuable information from multiple websites because of a process called ‘web scraping.’ Moreover, we can also study this information as we get it in a structured format.

But even with such an easy and time-saving alternative, we still face many challenges while scraping data. Let’s look at the most common web scraping challenges and the solutions ProWebScraper provides to overcome them.

Challenges while scraping data

Before getting into any process, it helps to study its challenges first. So, let’s go through the common web scraping challenges.

1. Anti-scraping mechanism

Several websites employ anti-scraping measures to block web scraping bots, which makes it difficult to access the website and scrape its data. Websites identify and block bots in several ways, including:

1.1 IP blocking

Websites note your IP address when you visit them, which makes it easier to identify bots. If you keep accessing a website from the same IP address and the website sees unusual activity from it, the website may block that address to reduce non-human traffic.

A web scraping bot often sends considerably more requests from a single IP address when crawling a site than a human user could produce in the same amount of time. A website can track the number of requests it receives from a particular IP address and block that address, or make the user complete a CAPTCHA test, once the volume of requests reaches a certain level.
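To make the request-counting idea concrete, here is a minimal sketch of how a site might track requests per IP address in a sliding time window. The class name, the limit, and the window length are illustrative assumptions, not a description of any particular website's implementation.

```python
from collections import defaultdict, deque


class RequestRateTracker:
    """Counts recent requests per IP address in a sliding time window.

    Hypothetical helper for illustration only.
    """

    def __init__(self, max_requests: int, window_seconds: float):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # ip -> timestamps of recent requests

    def allow(self, ip: str, now: float) -> bool:
        """Record a request at time `now`; return False once the IP is over the limit."""
        hits = self._hits[ip]
        # Forget timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        hits.append(now)
        return len(hits) <= self.max_requests
```

A site using something like this would block the address or serve a CAPTCHA whenever `allow` returns `False`.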

1.2 HTTP request analysis

Every HTTP request that a client sends to a web server carries a lot of hidden data, including HTTP headers, the client IP address, the SSL/TLS version, and the list of TLS cipher suites the client supports. Even the structure of the HTTP request, such as the order of the HTTP headers, can reveal whether a request comes from a real web browser or a script.

Websites can scan for these indicators and either reject requests that lack the signature of a recognised web browser or respond with a CAPTCHA.

If this happens, it becomes another barrier to your web scraping process.
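As a rough illustration of header-based checks, the sketch below flags a request when common browser headers are missing or the User-Agent names a well-known scripting tool. The header list, the hint strings, and the function name are assumptions chosen for the example; real detection systems look at many more signals, such as header order and TLS details.

```python
# Illustrative values only; a real site would use its own, much richer rules.
SCRIPT_AGENT_HINTS = ("python-requests", "curl", "wget", "scrapy")
EXPECTED_BROWSER_HEADERS = ("user-agent", "accept", "accept-language", "accept-encoding")


def looks_like_script(headers: dict) -> bool:
    """Return True if the request headers do not resemble a typical browser's."""
    # Header names are case-insensitive, so compare them in lower case.
    normalized = {name.lower(): value for name, value in headers.items()}
    missing = [name for name in EXPECTED_BROWSER_HEADERS if name not in normalized]
    agent = normalized.get("user-agent", "").lower()
    return bool(missing) or any(hint in agent for hint in SCRIPT_AGENT_HINTS)
```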

1.3 User behaviour analysis

Some websites also observe user behaviour. They monitor the pages visited, the time spent on the website, mouse movements, how quickly the user fills in a form, and much more. If the behaviour looks unusual, the website blocks the IP address or serves a CAPTCHA.
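One simple behavioural signal is timing: a person pauses irregularly between actions, while a naive bot often acts at near-constant intervals. The sketch below is only one possible heuristic. The function name and the `min_variation` threshold are assumptions, and real systems combine many signals.

```python
from statistics import mean, pstdev


def intervals_look_automated(timestamps: list, min_variation: float = 0.1) -> bool:
    """Flag a session whose gaps between actions are suspiciously regular.

    `timestamps` are action times in seconds, in ascending order.
    """
    gaps = [later - earlier for earlier, later in zip(timestamps, timestamps[1:])]
    if len(gaps) < 3:
        return False  # too little data to judge
    average = mean(gaps)
    if average <= 0:
        return True  # several actions at the same instant
    # Coefficient of variation: spread of the gaps relative to their mean.
    return pstdev(gaps) / average < min_variation
```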

1.4 Browser fingerprinting

Websites can also test whether a human or a bot is driving the web browser. The tests look for details about your operating system, installed browser add-ons, available fonts, timezone, and browser type and version, among other things. Combined, all this information creates a browser’s “fingerprint”.
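The "combined" part can be pictured as hashing the collected attributes into one identifier. This is a simplified sketch, assuming the attributes have already been gathered into a dictionary. The attribute names are hypothetical, and real fingerprinting scripts collect far more detail in the browser.

```python
import hashlib
import json


def fingerprint(attributes: dict) -> str:
    """Combine browser attributes into a single stable identifier."""
    # Sort the keys so the same attributes always produce the same hash.
    canonical = json.dumps(attributes, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()
```

Two visits that report identical attributes produce the same fingerprint, so a site can recognise a returning client even when its IP address changes.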

Solution:

You will have to install a proxy infrastructure if you don’t want websites to block you. It will be costly. Then what can be an easy alternative that fits your budget? You can opt for tools like ProWebScraper, which already has an inbuilt proxy infrastructure to support your needs. ProWebScraper has a pool of IP addresses, so even if one IP address gets blocked, the scraping process continues from another IP address. This way, your work doesn’t get hampered because of technical issues.
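The idea of carrying on from another IP address when one gets blocked can be sketched as follows. This is a generic illustration, not ProWebScraper's actual implementation: `fetch` is a hypothetical function you would supply, and `ProxyBlocked` is an exception defined only for this example.

```python
from typing import Callable, Sequence


class ProxyBlocked(Exception):
    """Raised by the fetch function when a website rejects a proxy."""


def fetch_with_rotation(
    url: str,
    proxies: Sequence[str],
    fetch: Callable[[str, str], str],
) -> str:
    """Try each proxy in turn and return the first successful response."""
    last_error = None
    for proxy in proxies:
        try:
            return fetch(url, proxy)
        except ProxyBlocked as error:
            last_error = error  # this proxy is blocked; move on to the next one
    raise RuntimeError(f"all proxies were blocked for {url}") from last_error
```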

2. Large Proxy Infrastructure

Now we know the need for proxies in the web scraping process. We need them to scrape the web pages without being blocked. But how do you get so many proxies? You will need a large proxy infrastructure that can bless you with millions of proxies to hide your identity.

The biggest challenge here is the budget. The cost of installing and setting up a proxy infrastructure is very high. So, are you ready to pay that amount to scrape the websites? Or do you want to look for a more accessible alternative?

Solution:

ProWebScraper offers you an easy solution without digging a hole in your pockets. With ProWebScraper, you get in-built proxies. So, now you don’t have to pay a heavy price for the entire set-up of the proxy infrastructure. Instead, you can easily get a ProWebScraper plan at an affordable price and let the tool take care of your proxies. With its never-ending proxies, you no longer have to worry about being blocked or hampering your scraping process.

These proxies are built to get past anti-bot detection, which makes them much harder for a website to identify. Additionally, they have a 99% success rate when scraping websites like Amazon, Walmart, Yelp, BestBuy, Wayfair, and more. So you can imagine their superpowers.

3. Geo Specific Scraping

Some websites do not allow access from certain regions; they block visitors based on their physical location. When you try to access such a website and it suspects that you are in a blocked location, it will deny your request.

Geo-blocking can also be a problem when a website serves different content depending on where you are. As a result, you risk missing crucial information that could be very useful to you. Can your business afford that?

Solution:

ProWebScraper has proxies from more than 200 countries, making it suitable to scrape any website. So, even if any website has blocked your physical location, you know who can help you, right? Why cut yourself off from the market’s important updates when you have ProWebScraper to fetch that information?

4. Website structure changes

Did you ever visit a website after a long time and see that it has completely changed its structure and layout? Yes, this keeps happening as websites update. Can you easily scrape these websites if so many structural changes keep occurring? Your technician will get tired of rewriting the scraping code each time the website structure changes. Above all, it is also a time-consuming process. How do you overcome this challenge, then?

Solution:

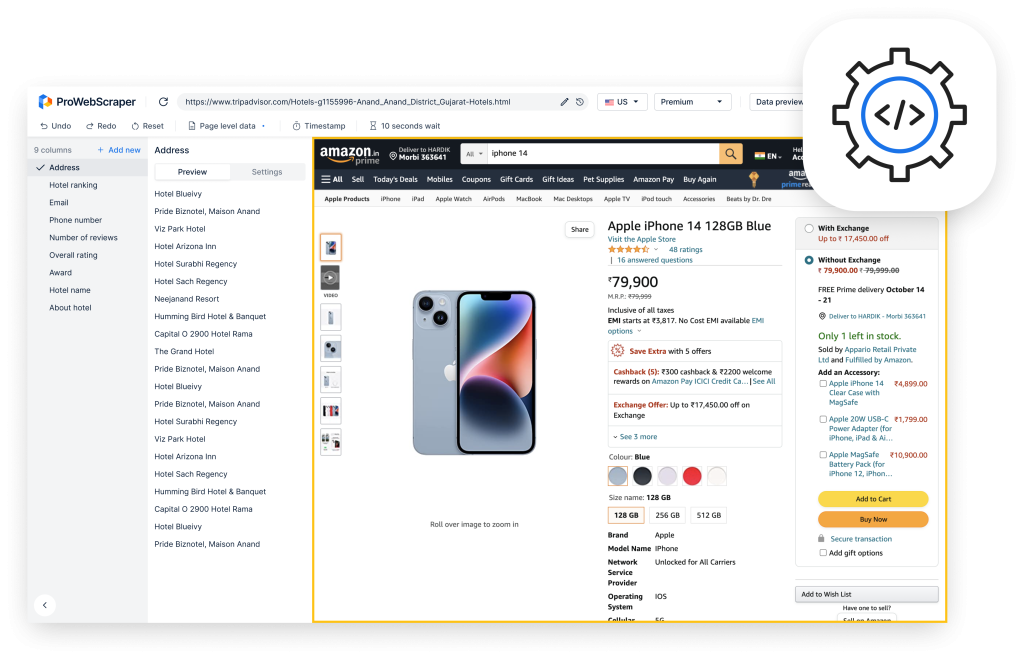

ProWebScraper can help you here as it is a no-code platform. You need no coding knowledge, and no technician, to build a scraper. That is the biggest advantage of ProWebScraper: you can set up a scraper with just a few clicks. And guess what? It takes barely two minutes to set one up.

As you only have to point and click on the different elements of the web page to set your scraper, it also saves time.
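For comparison, if you maintain scraper code yourself, one common way to soften the impact of layout changes is to try several extraction rules in order, so an older rule still works when a newer one stops matching. The sketch below assumes three hypothetical HTML shapes for a product price. A real scraper would normally use a proper HTML parser rather than regular expressions.

```python
import re
from typing import Optional

# Hypothetical page layouts, newest first; adjust these to the site you scrape.
PRICE_PATTERNS = [
    re.compile(r'<span class="price">\s*([^<]+?)\s*</span>'),
    re.compile(r'<div class="product-price"[^>]*>\s*([^<]+?)\s*</div>'),
    re.compile(r'data-price="([^"]+)"'),
]


def extract_price(html: str) -> Optional[str]:
    """Return the first price found by any known layout rule, or None."""
    for pattern in PRICE_PATTERNS:
        match = pattern.search(html)
        if match:
            return match.group(1)
    return None  # no rule matched: the layout has probably changed again
```

A `None` result is a useful signal that the page structure has changed and the rules need an update.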

5. Large-scale/distributed scraping

We know that every product has variations. And the websites have different pages for all these variations. Moreover, every category may have subcategories. If you scrape these pages one by one, tell me how long it will take to scrape all the pages. I am sure you will only be wasting your day.

So, you will need a tool to save time and scrape multiple pages for you at once. It will require large-scale or distributed scraping. How do you plan to solve this problem?

Solution:

For large-scale scraping, you need scalable architecture. But when you get a ProWebScraper subscription, you already get access to its scalable architecture. It helps to scrape hundreds of websites smoothly without missing out on any information. Yes, it knows how important this data is for you!
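To show what scraping multiple pages at once means in practice, here is a minimal single-machine sketch using a thread pool. `scrape_page` is a hypothetical function you would supply, and the worker count is an arbitrary assumption. A truly distributed setup would spread the work across several machines, which this example does not cover.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def scrape_all(
    urls: List[str],
    scrape_page: Callable[[str], str],
    max_workers: int = 8,
) -> Dict[str, str]:
    """Scrape many pages concurrently and return the results keyed by URL."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() runs scrape_page in parallel but yields results in input order.
        results = list(pool.map(scrape_page, urls))
    return dict(zip(urls, results))
```

Threads suit this job because scraping spends most of its time waiting on the network rather than computing.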

6. Data quality

Obtaining useful data and information is the primary aim of web scraping. As a result, it becomes a problem when you collect data, but it does not help achieve your marketing objectives. Although it can be discouraging, this is a significant barrier that web scraping requires you to overcome.

The scraper will initially collect data in an unorganised, disjointed manner. Dispersed data is typical, since a web scraper collects information from various sources. Duplicated data, unnecessary data, and even false information are all risks, and none of them will help you achieve your objectives.

So, do you plan to sit and give structure to this extracted data? Will you hire someone to do this for you as it’s a tedious process? None of these options is feasible.

Solution:

To avoid such issues, you can use tools like ProWebScraper, which gives you the extracted data in a structured format. You can also download this data in CSV or JSON formats and share it with your peers to make a good marketing strategy together.

You can also do this via API or cloud applications like AWS, Dropbox, and more.

Do I even need to say that the duplicated data will be eliminated automatically?
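If you are curious what such clean-up involves, the sketch below removes duplicate records and writes the result as CSV. It is a generic illustration rather than a description of how ProWebScraper works internally. The function names and the choice to compare fields case-insensitively after trimming whitespace are assumptions.

```python
import csv
import io
from typing import Dict, List


def deduplicate(records: List[Dict[str, str]], key_fields: List[str]) -> List[Dict[str, str]]:
    """Keep the first record for each distinct combination of key fields."""
    seen = set()
    unique = []
    for record in records:
        # Normalise values so " lamp " and "Lamp" count as the same item.
        key = tuple(str(record.get(field, "")).strip().lower() for field in key_fields)
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


def to_csv(records: List[Dict[str, str]], fieldnames: List[str]) -> str:
    """Serialise records to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()
```

The same list of dictionaries can be passed to `json.dumps` from the standard library when JSON is the preferred format.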

Conclusion

We saw that ProWebScraper is one such tool that solves all your major web scraping challenges. Let me give you some exciting news now. Getting started with ProWebScraper is FREE! Yes, you can scrape up to 100 pages without paying a penny. So why not try your hand at this tool before spending a lot on setting up a scraping infrastructure?

For any more queries, you can always contact the ProWebScraper support team, and they will be happy to serve you.