Web Scraping is a science to fetch data from websites. The bot crawlers used to scrape the data retrieves the underlying HTML code faster than it can be done manually. But this should happen without casting detrimental effects on the site.

Web Scraping practices must be fair that should not impact the performance of the target site you are trying to get the data from.

Why do you need Top Web Scraping Best Practices?

Fair web scraping practices are relevant so that you could get data to the maximum depth that serves your purpose and helps you prepare an authentic database. Web Scraping practices prevent you from getting blocked by the target website. If in any case you fail to attain the high standard protocol for the scraping practices, you could end up with some legal complications and being blocked.

In short, if you do not wish to invite any legal consequences you would have to abide by the fair web scraping practices. But how would you know that you have been blocked?

To identify that you are being stopped to access the information from a particular website, you must observe a few patterns. Like if your crawler happens to see any of these, it implies that you have been blocked by the site:

- Unusual delay in delivering the content.

- Errors like HTTP 404, 301, or 50x that too frequently.

- HTTP errors like 301 (Moved Temporarily), 401(Unauthorized), 404 (Not found), 429 (Too Many Requests), or 503 (Service Unavailable).

- Captcha Pages before entering the page.

What are the best web scraping practices?

You may understand web scraping practices as the guidelines and actions which you may bear in mind when getting onto scraping. Here are a few things you must keep in mind as best web scraping practices:

1. Do not disturb the server often

When preparing for web scraping, you must know that not all sites allow crawlers to come in all the time. They will have some frequency set for these bots.

If you are visiting the website and its hosted server often, then chances are the traffic gets high. It could at times lead to the crashing of the server.

Hence, the best practice could be to visit the website at an interval delay of 10 minutes or more every time. You may also consider placing an order to the bot as specified in robots.txt. Both ways can prevent you from getting blocked.

2. Do as mentioned in the Robot.txt file

Robot.txt file is a that directs the search engine robots on ways how to crawl and index pages on the website. The file is made of instructions for bot crawlers which are nothing but software programs.

To get to the root of the data you want to fetch, you must first check the robot.txt file. This file can be accessed in the admin section of the website.

You can open the file to see which areas of the website the bot can crawl without restriction. Apart from the information, you must also check the frequency of crawling allowed. But if anywhere you see restrictions imposed to not crawl, you must abide by that to save yourself from serious legal implications.

3. Use Proxy Services, Keep rotating your IP Addresses

This could be one common challenge for web scraping. Not all websites allow you to visit them over and over again especially if they observe a pattern of your visit. To avert the challenge, you should use proxy services. When you are visiting a website they can see your IP address. They would know what you are doing exactly if you are here to collect the data.

Let the visiting not know that you are the same authority visiting them again and again. You can create a bank of IP addresses that you can use for sending requests. You must also remember to keep rotating your IP address. At times, the website server can block your spider crawler if it doesn’t want you there. Some ways in which you can change your outgoing IPs include:

- VPNs

- Free Proxies

- TOR

- Private Proxies which are owned by you. With this, the chances of getting blocked are rare only when the frequency of visiting is low.

- Shared Proxies are shared amongst many users. The chances of getting blocked are high in this case.

- Residential Proxies when you are making a large number of requests to a website that blocks actively. These are very expensive and hence this should be the last choice to make.

- Data Center Proxies if you need a faster and large number of proxies.

4. Think of Agent Rotation and Spoofing

Every time you request the server to access the information through the web scraper tool, it consists of a user agent string.

But do you know what a user agent string is?

A user agent string is a tool that informs the server about which browser, version, or platform you came in for the visit. If you do not have this set, the website may not allow you to enter and view their content. Using the same user-agent’s request over the target website can be risky.

The server can spot that it is the same bot that crawls over and over again. They can block you and know that it is the same crawler approaching every time. To prevent this from happening, you must rotate the user and the agent between the two requests.

Don’t know how to get your user agent? It is a simple process and for that, you need to type “what is my user-agent” in the search bar of Google. You can use different User-Agent strings that are available online. Apart from these, you can also pretend to be a Google Bot (http://www.google.com/bot/html).

Despite these tricks, if you still get blocked after using the recent user-agent string, you need to add some more request headers that may include:

- Accept

- Referrer

- Accept-Language

- Upgrade-Insecure-Requests

5. Avoid using the same crawling pattern.

Web scraping bots may have the same pattern when they crawl a website. It is simply because they are programmed to behave in that manner. At the same time, the sites that have anti-crawling mechanisms can detect these bots by observing their pattern. If they find any discrepancy, the bot can be blocked from web scraping at any time in the future.

To avoid a situation like this, you must not forget to incorporate some random clicks and mouse movements in the bot. It should look like a human is crawling the web page and not a bot.

6. Be Clear and Transparent.

It is best and wise to disclose your information if you want to gain access to the website. At times, a few websites ask you to create a login and a password to access their information. If that is the case, it is better, you tell who you are and then access the website. Do not hide who you are as otherwise, the website will block you from using their data.

7. Use a headless browser.

A real browser is important to scrape the data which can be checked if the web browser renders a block of JavaScript. The website you want to visit may deploy many bot detection tools. These tools can indicate that the browser is controlled by an automated library.

The anti-bot tools check whether :

- There exists support for nonstandard browser features.

- Any bot-specific signature is present or not.

- Any automation tool like Selenium or Playwright is present or not.

- There are any random mouse movements, scrolls, clicks, tab changes, etc.

You can use these with your headless browser to prevent your scraper from being banned.

- Fingerprint Rotation

- Patching Selenium

8. Check the website for the layouts.

Web Scraping is not easy and to prevent the activity some websites use different layouts. Understand this is a trick and you can scrape the data using XPaths and CSS selectors.

9. Do not scrape after a Login.

You must avoid scraping a website that asks you to log in. If you try getting the information after login, the server will identify that information is coming from the same IP address. Noticing this activity, the website can store your credentials and block you from scraping further.

To avoid a situation like this, imitate the ways of browsing as humans would do especially when authentication is required. It will help you get the data you want.

10. Use Captcha Solving Services.

A website you want to scrape may have applied anti-web scraping measures. In such a case, when you choose to scrape the data, instead of web pages you will get to see captcha pages. To overcome this challenge, you can use captcha solving services and get your job going.

Use Captcha solvers and get in to look into the data. Don’t worry the services are quite affordable.

11. Prefer to scrape in the slow hours.

It is wise to scrape the data when the traffic to the website, you want to visit, is less. You can know about the maximum peak hours and the major point of traffic using geolocation. This saves your request from getting rejected and improves the crawling rate.

12. Do not copy. Use the data mindfully.

Copying the data is not admissible in any business. The same is the case with web scraping as well. You cannot scrape the data and publish it for your use anywhere it is. It can be breaking copyright laws which can lead to legal issues. Better is that you check the “Terms of Service” before scraping.

Things to keep in mind when scraping at large scale

Here are a few things to keep in mind when scraping data at a large scale:

- For a large database, you can use Amazon Web Services (AWS) or Relational Database Services (RDS). AWS takes care of the data back-up and always keeps a snapshot of the data. While RDS helps to keep a structured database.

- Run the test cases to extract the logic and see whether the target website is the same or has changed.

- Use proxy services to hide your IP address from which you can visit the target website. The proxy server can rotate the IP hiding your identity and you can scrape the data.

- Use Scrapy frameworks if you are working on a larger website. It means that there will be too much data to scrape. If you do not want to waste time then you need to design your spiders carefully with the proper LinkExtractor.

- As discussed above in the best practices, you must make sure of using captchas solvers to enter the website. Captchas, as discussed above, are used by the websites to keep you away from fetching the data.

Conclusion

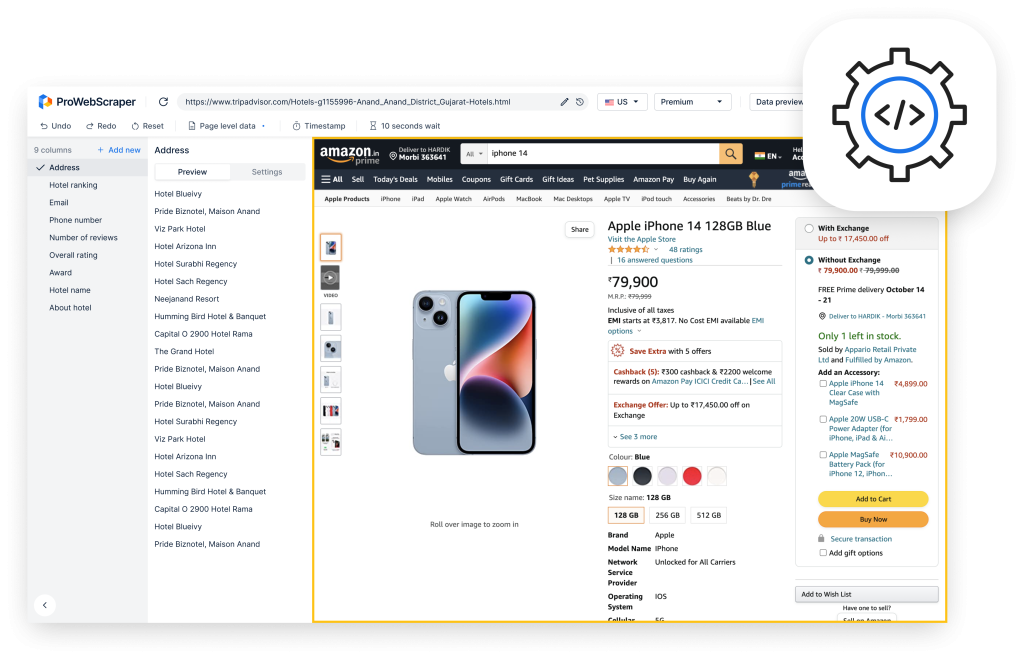

Scraping is a task that can be completed if you bear in mind the guidelines, adopt the best practices, or use the web scraping tools. ProWebScraper is one of the best web scraping tools that can be used without any challenge and knowledge of coding. A customized scraping tool will allow you to extract the data from multiple pages. Fetching the maximum data is your target and you should stick to that.

You can use ProWebScraper hassle-free as it comes with world-class customer service, free scraper set-up in 2 hours, and affordable prices. It is one tool that can assist you to scrape data from any website, that too, effortlessly. The best part is that a user like you would not require knowledge of coding and can fetch the data by just clicking on the items of interest. Using the ProWebScraper minimizes your chances of making mistakes. The scraping software makes sure that you keep the backend secure because in no case, should you disrespect the rules of the website you want to crawl.