Introduction

How do you look for data on the web? You may say you search for it. But what if I ask how you get bulk data from the internet? Most likely, your answer will be that you have to scrape the web.

Web scraping is a process through which we can quickly extract required data from websites.

The tools that help us in this process are web scraping tools. You may have to hire separate technicians, as this process involves a lot of coding. But thanks to technology, we now even have no-code web scraping tools with which any non-technician can scrape data from websites.

For technicians, one go-to web scraping tool is Scrapy.

Scrapy

You must have heard of Scrapy for crawling a site and storing the data in a structured format. Scrapy is a top-rated tool, partly because you can also integrate it with APIs.

You can build your own web spiders and run them across web pages to extract the necessary data. Moreover, you can deploy your spiders to Zyte Scrapy Cloud, or use Scrapyd if you want to host them yourself. Its fast and powerful approach has made it a go-to tool for anyone who wants to extract data.

People use Scrapy for:

- Web crawling

- Extracting real-time data

- Application integration

- Web development

- Mobile development

- Gathering structured and unstructured data

- Lead generation

- Competitor research

- Cross-site data integration

- User logins

- Bypassing captchas and more.

A few renowned companies like Intoli, Tryolabs, Zopper, and Offertazo also use Scrapy in their day-to-day operations.

However, there are a few limitations to this popular tool as well.

Why do we need alternatives to Scrapy?

Just as every coin has two sides, Scrapy comes with its limitations. That is why people are still looking for alternatives to Scrapy.

Let us understand what people are unhappy about in Scrapy.

1. Learn to code

Scrapy is a Python framework, so you have to be well-versed in Python to use it. A non-technician is unlikely to have this knowledge. Hence, a company usually has to hire a technician who can use Scrapy to crawl websites and extract data.

A beginner may take nearly a month to learn the language. The steep learning curve makes this a time-consuming task.

Moreover, you need to invest resources in learning Scrapy. As it is code-based, it doesn’t have an easy-to-use graphical interface. Hence, learning Scrapy can cost a lot of time and money.

Additionally, maintaining a Scrapy setup can be expensive. Scrapy itself is free and open source, but you need to obtain supporting pieces such as proxies and hosting separately and integrate them with your system to make your scrapers work.

2. Deploy cloud / Difficult to scale


Before choosing any web scraper, it is important to check its scalability. If a tool cannot scrape multiple pages simultaneously, scraping becomes a time-consuming task. With Scrapy, you typically run the scraper on your local system. Hence, it can only scrape as many pages at a time as that machine supports. Once you reach this limit, scraping more pages becomes an issue. To solve this problem, you will have to deploy to the cloud to scale the tool.
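How many pages a single machine fetches at a time is governed by a handful of Scrapy settings. The fragment below sketches part of a project's settings.py; the setting names are Scrapy's own, but the values are illustrative assumptions rather than recommendations.

```python
# settings.py (fragment); all values are illustrative assumptions.

# Upper bound on requests Scrapy keeps in flight at once across all sites.
CONCURRENT_REQUESTS = 32

# Upper bound on simultaneous requests to any single domain.
CONCURRENT_REQUESTS_PER_DOMAIN = 8

# Seconds to wait between consecutive requests to the same site.
DOWNLOAD_DELAY = 0.25

# Let Scrapy adapt the delay to how quickly the site responds.
AUTOTHROTTLE_ENABLED = True
```

Raising these numbers only helps until the machine's CPU, memory, or bandwidth runs out, which is the point at which a cloud deployment comes in.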

To deploy to the cloud, you’ll have to purchase cloud hosting and integrate it with Scrapy separately. Scrapy itself doesn’t include an inbuilt cloud platform that works as a one-stop solution. Hence, the entire process consumes time and effort, which businesses find disappointing.

3. Integrate proxy to avoid blocking

Once you start scraping web pages, your IP address gets recorded. After a while, because of the unusual number of visits from the same IP address, your scraper may no longer be able to access those pages. This happens because your IP address has been blocked. But how do you still extract the data you want?

For this, you will have to use proxies. Proxies are other IP addresses that you use temporarily to access the websites you need. By using proxies, your scraper is far less likely to get blocked by the websites.

However, Scrapy doesn’t come with a pool of proxies built in. Thus, you have to integrate proxies with Scrapy separately. This becomes an additional expense for companies, since you usually have to purchase proxies from third parties.
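Integrating purchased proxies could look roughly like the sketch below, which rotates through a list in round-robin order. The helper name make_proxy_rotator and the proxy addresses are hypothetical; real addresses would come from your proxy provider.

```python
from itertools import cycle


def make_proxy_rotator(proxies):
    """Return a function that hands out request-meta dicts in round-robin order."""
    pool = cycle(proxies)

    def next_meta():
        # Scrapy's built-in HttpProxyMiddleware reads the "proxy" key
        # from a request's meta dict.
        return {"proxy": next(pool)}

    return next_meta


# Hypothetical proxy endpoints, for illustration only.
next_proxy_meta = make_proxy_rotator([
    "http://proxy1.example.com:8000",
    "http://proxy2.example.com:8000",
])
```

Inside a spider, each request would then be created with something like `scrapy.Request(url, meta=next_proxy_meta())`, so that consecutive requests leave through different proxies.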

4. Doesn’t handle JS

Scrapy cannot execute JavaScript on its own. To scrape pages whose content is generated by JavaScript, it has to depend on a separate rendering service such as Splash.
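To see why this matters, consider the sketch below. It uses only Python's standard library and a made-up page in which the price list is filled in by a script. RAW_HTML, ListItemCollector, and extract_items are hypothetical names for illustration. A scraper that reads the raw HTML without running the script finds no list items at all.

```python
from html.parser import HTMLParser

# Made-up page: the list is empty in the raw HTML and is only filled in
# once a browser executes the script.
RAW_HTML = """
<html><body>
  <ul id="prices"></ul>
  <script>
    document.getElementById("prices").innerHTML = "<li>19.99</li>";
  </script>
</body></html>
"""


class ListItemCollector(HTMLParser):
    """Collects the text of every <li> element present in the markup."""

    def __init__(self):
        super().__init__()
        self.in_li = False
        self.items = []

    def handle_starttag(self, tag, attrs):
        if tag == "li":
            self.in_li = True

    def handle_endtag(self, tag):
        if tag == "li":
            self.in_li = False

    def handle_data(self, data):
        if self.in_li and data.strip():
            self.items.append(data.strip())


def extract_items(html):
    """Parse the markup as-is, without executing any JavaScript."""
    parser = ListItemCollector()
    parser.feed(html)
    return parser.items
```

Calling `extract_items(RAW_HTML)` returns an empty list, because the `<li>` only exists as text inside the script. A rendering service runs the script first and hands the scraper the resulting HTML.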

Scrapy alternatives for Non-Tech Professionals

Scrapy works well for technicians. But what will you do if you are a non-tech person and don’t have the budget to hire a technician? Well, you can solve your problem by using Scrapy alternatives.

Yes, a few tools in the market can be used without coding. Hence, any non-technician can scrape data from the desired URLs without technical knowledge. These no-code platforms have proved to be a blessing for most businesses, as they require data regularly.

While there are many alternatives to Scrapy, here is a list of the top 5.

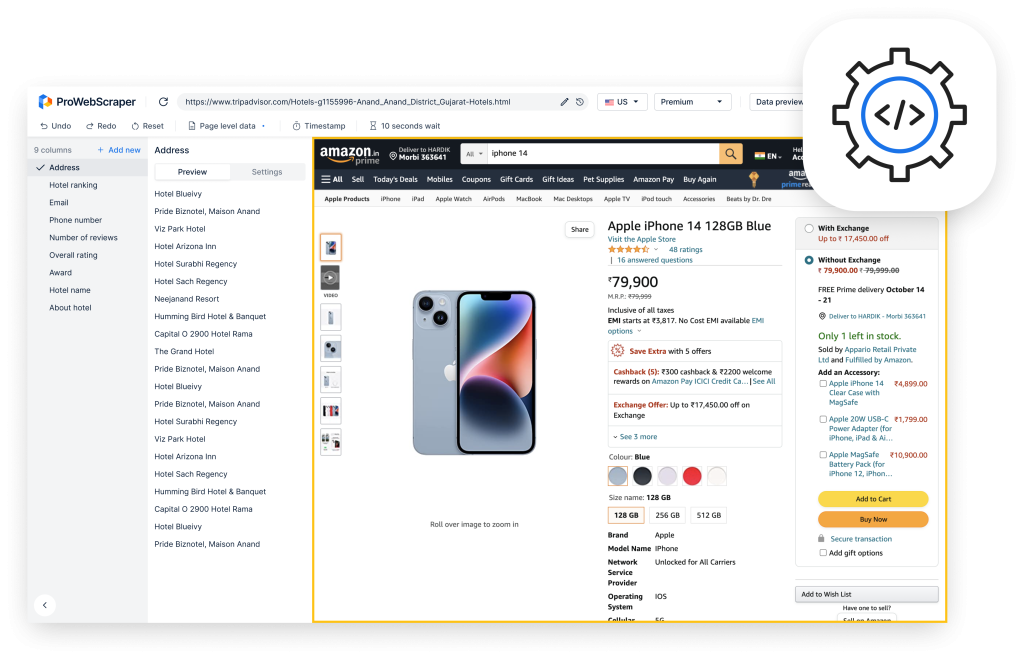

1. ProWebScraper

ProWebScraper is a no-code web scraping tool that allows you to extract data from web pages effortlessly. Its affordability and strong customer service make it a preferred web scraping tool among its users.

Moreover, its support team helps you set up the web scraper for free. This sets ProWebScraper apart from its competitors, because building and maintaining a scraper usually accounts for much of the cost. Apart from this, it also allows you to download the extracted data directly in your desired format.

Let us have a detailed view of the features of ProWebScraper.

No learning curve

Since it is a no-code platform, it doesn’t require you to learn a programming language. Hence, the learning curve for ProWebScraper is lower than that of Scrapy, which saves you time.

Once you understand the tool’s interface, it is extremely easy to use. Instead of coding, ProWebScraper uses a point-and-click selection method to scrape data from websites.

Handle dynamic websites/ JS

It is easier to scrape static websites since the data remains the same. However, most web scraping tools have limitations with dynamic websites. ProWebScraper, though, can effortlessly scrape data from dynamic websites as well.

Increased scalability

With the highly scalable architecture of ProWebScraper, it becomes easy to scrape data from multiple websites simultaneously. Moreover, you can scrape millions of pages daily. You can scrape multiple pages at a time even in the free version of ProWebScraper, and the number of pages increases if you opt for a subscription.

Inbuilt proxy support

You’ll need proxies to scrape web pages. For Scrapy, you will have to purchase these proxies from third parties. But while using ProWebScraper, you no longer have to waste your time rushing to someone else and asking for proxies. ProWebScraper comes with inbuilt proxy support, which means your scraping process won’t stop because you ran out of proxies. The tool manages the proxies for you and provides you with the relevant data. Doesn’t that seem like your exact requirement?

2. Parsehub

This web data extraction tool is equally popular because of its no-code approach. You can create an API or schedule your scraping process through Parsehub. Moreover, if you need to deal with JavaScript, maps, Ajax, dropdowns, calendars, or other such elements, Parsehub can help you.

Its only disadvantage is that its interface is not as user-friendly as that of other tools. You may have to repeat a few steps to get the desired output.

3. Octoparse

If you are looking for a SaaS web data platform that you can use for free, you must try Octoparse. Like ProWebScraper, it uses a point-and-click selection method to scrape data from web pages. This means that you do not need to understand coding to use this tool.

You can scrape any number of web pages without any cost while using Octoparse. It also gives you access to its cloud platform and lets you schedule your scraping process. Do I need to mention that Octoparse also helps you with anonymous scraping?

4. Apify

Like other web scraping tools, Apify also allows you to scrape data from web pages anonymously with the help of proxies. You can also use its cloud platform to simplify the process and store data in CSV, JSON, or Excel formats.

Linking Apify with APIs is also not difficult. However, people sometimes face problems while handling bulk data.

5. Mozenda

Mozenda allows you to scrape web pages with excellent efficiency without the need for technicians. Being a no-code platform, any layman can use Mozenda without much learning. You can scrape different elements like text, PDF, and images from your desired URLs. After the scraping process, it also allows you to download data and organize it in a structured form to make your decisions easier.

As a cherry on top, Mozenda also allows you to integrate data with other platforms like Google Analytics, Salesforce, Shopify, etc.

Conclusion

As we have seen, every tool has its own set of pros and cons. Most people opt for one-stop solution tools to avoid the unnecessary headache of looking for third-party services and coordinating between them. You can decide on a tool that fulfils your demands and is affordable for your business.

Don’t forget to let us know in the comments about your favorite web scraping tool.